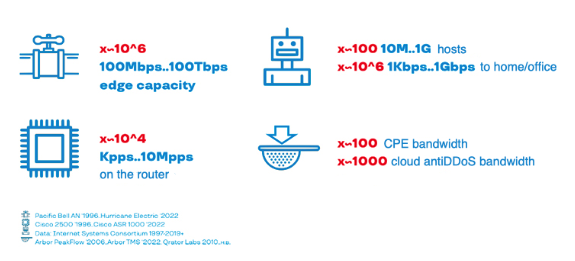

What units can we use to measure a DDoS attack? Bits per second, queries, packets, downtime periods, the number of machines in the botnet. All these answers are correct because DDoS attacks come in different categories, each with its own essential metrics. The growth of these metrics is the driving force behind the evolution of DDoS attacks.

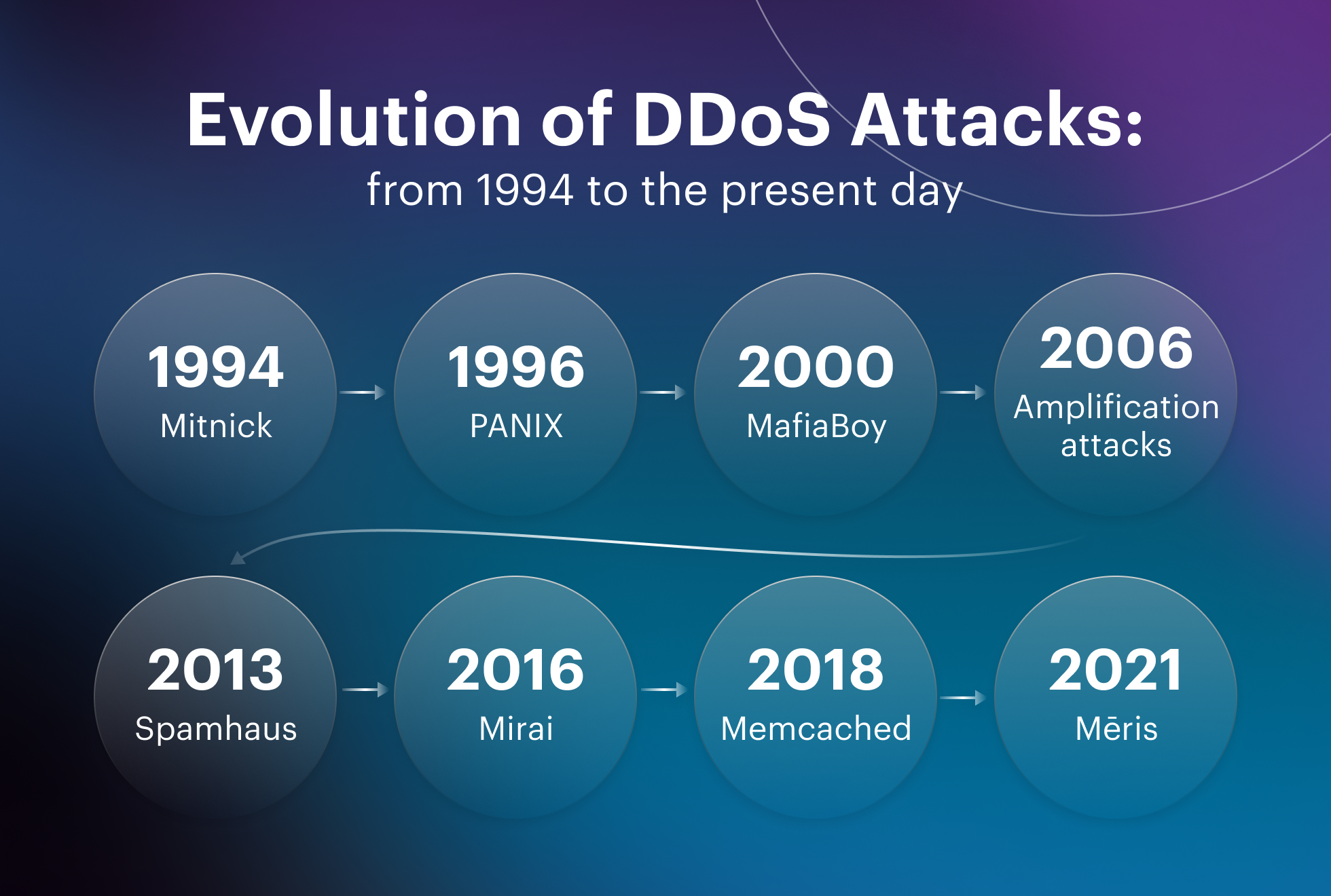

Let’s see how distributed denial of service (DDoS) attacks have developed over the years, what they have developed into and what is specifically worth our attention.

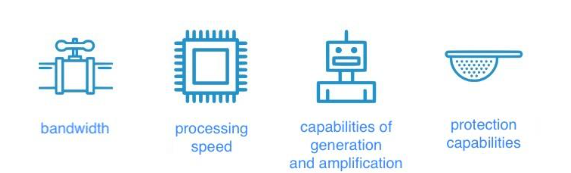

If you consider evolution in nature, it is driven by such forces as struggle for resources, inheritance, mutations and natural selection. DDoS attacks have their own evolution and the forces that drive them:

Bandwidth

For high-bandwidth attacks that generate a lot of traffic, the key driving force is the growth in the channel capacity of Internet service providers. It determines how much junk traffic can be delivered to the victim.

Processing speed

For attacks that generate a large number of packets per second (first of all, transport-layer floods such as TCP SYN flood, SYN+ACK flood, ACK flood and SYN+ACK amplification), the key is how many packets the routing devices along the path can handle.

For application-layer attacks, where the cost of generating each attack unit is quite high, this force is requests per second: how many requests per second one machine in the botnet can generate, and how many simultaneously open connections it can sustain for the duration of the attack.

Generation and amplification capabilities

The number of servers that can amplify DDoS traffic serves as a driving force for the development of amplification attacks. Most of them use application protocols on top of UDP.

Means of protection

Means of protection against DDoS attacks are also developing and taking part in the ‘arms race’. Thus, they are a driving force for the attackers, who have to outplay them.

RFC (new protocols, implementation mechanisms)

New protocols and network mechanisms give rise to new types of attacks that exploit the design of a new protocol or a specific implementation of it, for example in the routers of a particular vendor or in mass-market devices. In addition, old attack methods can be adapted to new protocols.

To understand what these driving forces lead to, let's look at how they have evolved from the 1990s to the present day.

In such conditions, there is no need to talk about competition for resources: everyone gets along fine. Love reigns, and new methods live in peace with the old ones.

Let's talk first about the oldest methods.

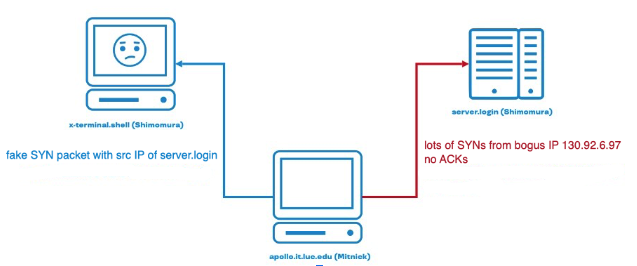

Let us review one remarkable episode in the career of the famous hacker Kevin Mitnick.

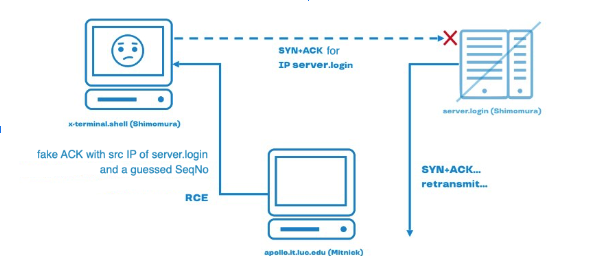

Mitnick tried to gain access (unauthorized, of course) to a machine that belonged to his long-time opponent Tsutomu Shimomura. For that purpose, he hacked a host in the laboratory network and posed as a login server from which there was a trusted login to the desired terminal.

The problem is that if you spoof a connection, pretending to be someone else's host, you need to take the real host offline somehow so that it cannot respond. To do this, Mitnick generated a large number of SYN packets from the hacked machine, spoofing as their source a valid but unused IP address from the same network.

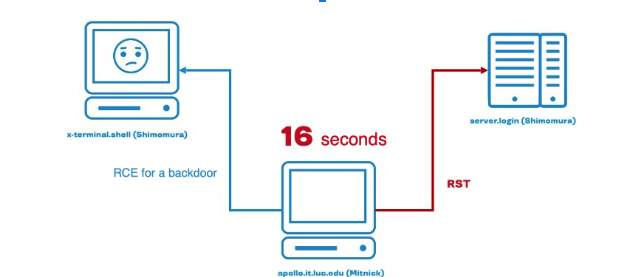

The login server routinely accepted all these packets into its queue of half-open connections, filled up the queue and sent all its SYN-ACKs to a non-existent machine. So when Mitnick spoofed the connection, posing as the login server, the real login server could not react to the SYN-ACK packet that the terminal sent back, since it was already swamped. As a result, Mitnick established a TCP connection, got a shell, planted a backdoor, closed the connection and sent a reset to the login server. According to the logs, the entire attack lasted 16 seconds.

Mitnick’s attack is a textbook example of a SYN flood, one of the oldest cases of a DoS attack, at least of those described on paper. Back then it was not yet a distributed attack, but a denial of service from a single machine. Later on, a detailed analysis of this situation appeared in the mailing lists, with logs and other details; first Shimomura's book came out, then Mitnick's. This is how people who were interested in the Internet and technology came to know that such an attack was possible.

The main period begins in the years when DDoS attacks, which today are commonplace and something we have learned to live with, emerged, evolved and attracted a lot of public attention.

1996: PANIX SYN flood

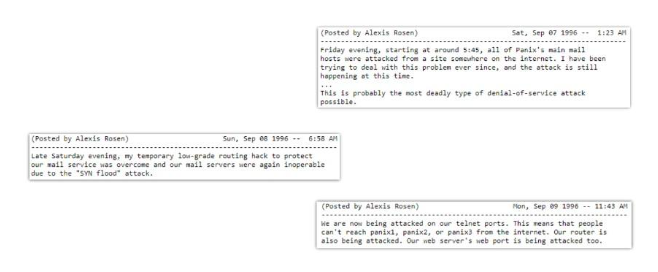

Just a couple of years after Mitnick's SYN flood, users of one of the world's oldest providers, New York-based PANIX, discovered that their mail servers were down. Spammers had thousands of emails sent through them, using a SYN flood from several hacked machines. PANIX’s administrator on duty reported the attack in a meme-worthy fashion: “It is a new threat that might have no solution”.

After a day and a half of downtime, they realized that they were dealing with a distributed denial of service attack that used SYN packets generated from hacked machines.

The reaction of the mass media was reserved and only a few industry journals covered the story. However, this was nearly the first time that someone had written anything at all on the topic outside the industry-specific mailing list.

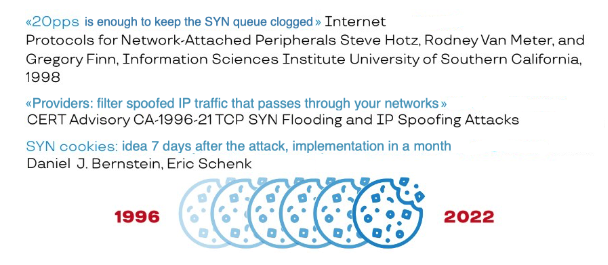

The reaction of the professional community was a little more lively. Two years later, research showed that 20 packets per second is enough to keep an average server's queue of half-open connections (SYN queue) completely clogged, after which the server cannot accept any other incoming TCP connection.

Internet Protocols for Network-Attached Peripherals Steve Hotz, Rodney Van Meter, and Gregory Finn, Information Sciences Institute University of Southern California, 1998

20 packets per second is not a lot even by the standards of the time, especially if they are generated by several machines. So although we don't know how much traffic was generated in the attack on PANIX, the first DDoS attack on record, it obviously did not take a lot of packets.
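To see why so few packets are enough, here is a rough back-of-the-envelope sketch. The entry lifetime and queue size below are hypothetical illustrative values, not figures from the cited study: the point is only that each spoofed SYN occupies a slot until it times out, so the steady-state occupancy is the packet rate multiplied by the lifetime.

```python
def half_open_entries(syn_rate_pps, entry_lifetime_s):
    """Steady-state number of half-open entries kept alive by a spoofed SYN stream."""
    return syn_rate_pps * entry_lifetime_s


def backlog_saturated(syn_rate_pps, entry_lifetime_s, backlog_size):
    """True if the stream alone is enough to keep the SYN queue full."""
    return half_open_entries(syn_rate_pps, entry_lifetime_s) >= backlog_size


# Assumed values for illustration only:
ENTRY_LIFETIME_S = 75  # hypothetical time before a half-open entry is discarded
BACKLOG_SIZE = 128     # hypothetical SYN queue length

print(half_open_entries(20, ENTRY_LIFETIME_S))                # 1500
print(backlog_saturated(20, ENTRY_LIFETIME_S, BACKLOG_SIZE))  # True
```

Under these assumptions, 20 packets per second hold about 1500 slots, far more than the queue has.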

The expert community (CERT Response Center) carefully considered the causes and symptoms of the problem and issued expert recommendations of a very generalized character: “for all the good things, against the bad”.

“Attention Providers: filter traffic from spoofed IP-addresses going through your networks”

CERT Advisory CA-1996-21 TCP SYN Flooding and IP Spoofing Attacks

That is, the providers are urged to prevent the incoming and outgoing spoofed IP traffic from penetrating their networks. If it's spoofed, it's an attack. The idea is wonderful! But how is that done?

There is a set of tips. But by now it is obvious that none of the CERT recommendations were implemented, either fully or partially. Fighting DDoS attacks by reasoning with Internet providers, hosters and transit networks has not proven to be effective.

Practical approaches to solving the issue have been more successful. For example, seven days after the attack on PANIX, Daniel Bernstein and Eric Schenk discussed and came up with a new method of dealing with floods of initiated TCP connections. The mechanism was called SYN cookies. A month later, the first implementations of this mechanism appeared for the server OSs that were popular at the time. To get an idea of how successful the technology turned out to be, take any DDoS protection tool (an on-premises box, operator protection, a cloud provider or software installed on load balancers). Almost all of them implement SYN cookies to filter out invalid incoming SYN packets from non-existent or non-subscriber IP addresses. The method has aged remarkably well!
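The idea behind SYN cookies can be sketched roughly as follows. Instead of storing a half-open entry, the server derives its initial sequence number from the connection parameters and a secret, and only allocates state when a returning ACK proves that the client really received the SYN-ACK. This is a simplified illustration, not the layout used by any real TCP stack (real implementations also encode details such as the MSS); the secret, the slot length and the function names are assumptions.

```python
import hashlib
import hmac
import struct

SECRET = b"hypothetical-server-secret"  # assumption: random per-server key
SLOT_SECONDS = 64                       # assumption: length of one time slot


def _cookie(src_ip, src_port, dst_ip, dst_port, slot):
    """Derive a 32-bit value from the connection 4-tuple and a time slot."""
    msg = f"{src_ip}:{src_port}>{dst_ip}:{dst_port}|{slot}".encode()
    digest = hmac.new(SECRET, msg, hashlib.sha256).digest()
    return struct.unpack("!I", digest[:4])[0]


def make_isn(src_ip, src_port, dst_ip, dst_port, now):
    """Sequence number to put in the SYN-ACK; no per-connection state is stored."""
    return _cookie(src_ip, src_port, dst_ip, dst_port, int(now) // SLOT_SECONDS)


def ack_is_valid(src_ip, src_port, dst_ip, dst_port, ack_number, now):
    """Accept the final ACK only if it echoes a cookie from this or the previous slot."""
    expected_isn = (ack_number - 1) % 2**32
    slot = int(now) // SLOT_SECONDS
    return any(
        _cookie(src_ip, src_port, dst_ip, dst_port, s) == expected_isn
        for s in (slot, slot - 1)
    )


client = ("198.51.100.7", 40000, "203.0.113.10", 80)
isn = make_isn(*client, now=1000.0)
print(ack_is_valid(*client, ack_number=(isn + 1) % 2**32, now=1010.0))  # genuine client
print(ack_is_valid(*client, ack_number=(isn + 2) % 2**32, now=1010.0))  # guessed ACK
```

A spoofed source never sees the SYN-ACK, so it cannot produce the matching ACK, and the server has spent no queue slot on it.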

2000: MafiaBoy kills top sites

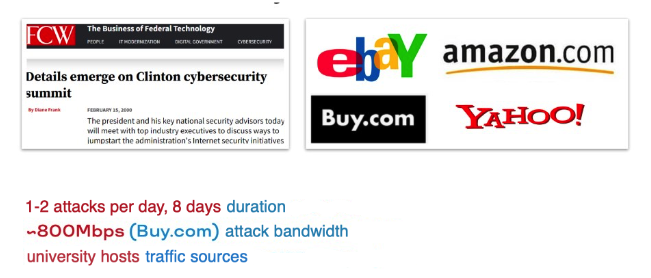

While the attack on PANIX did not make a big splash in the global community, just four years later a 15-year-old hacker nicknamed MafiaBoy did exactly that. Using the same attack method, by then well proven, he knocked offline the largest .com sites of the time.

History has preserved very few technical details.

The hacker's name was Michael Calce. He was not much into secrecy, left traces everywhere, and liked to brag about his achievements. Well, he ended up in prison. But MafiaBoy did not simply go to jail: he went there because, a few hours after the first attack, President Clinton convened a summit.

Dedicated to cybersecurity, the Summit resolved that something should be done about DDoS actors – they should be found, charged and put in jail. That's exactly what happened.

This episode can be considered a starting point for the prosecution by law enforcement agencies of those who organize DDoS attacks.

2006: Amplification attacks

Amplification became a completely new way of exploiting hosts to organize DDoS attacks.

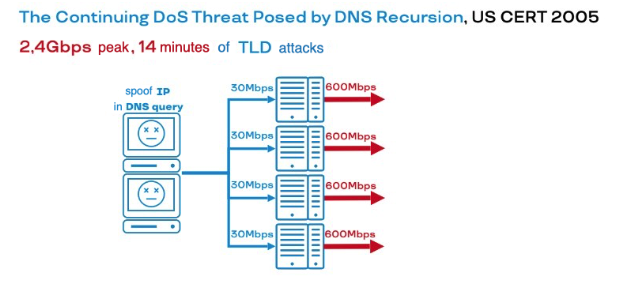

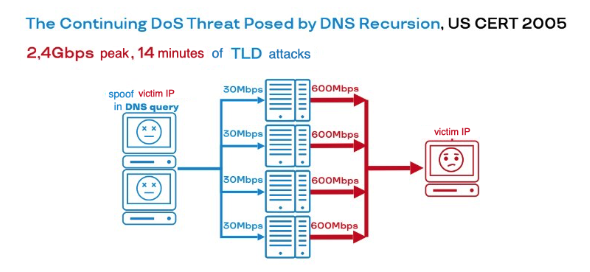

The expert community, having had time to prepare and study possible vectors of such activity, learned about this method before it was first used. The above-mentioned American CERT issued a bulletin, "The Continuing DoS Threat Posed by DNS Recursion", back in 2005. It said that the large number of recursive DNS resolvers appearing on the Internet, which answer DNS requests from every Tom, Dick and Harry, could lead to problems. Someone might send lots and lots of small DNS queries to recursive resolvers, and the outgoing traffic could become so large that it clogs the channels of these servers. And that's a threat to the DNS infrastructure.

Less than a year after this bulletin, the then-largest attack (and possibly the largest in history) hit the root DNS infrastructure, top-level domains (.com, .uk, etc.) and the .ru DNS servers. There were about 10 of the latter at the time (there are 13 now). One way or another, the infrastructure was knocked out completely in one case and partially in the others. The traffic generated in this way was already reaching gigabit values, crossing a psychological threshold (2.4 Gbps at peak, 14 minutes of attack on the TLDs).

But what is interesting here is that the attackers went a little further than the researchers from CERT. They thought: why flood name servers with outgoing traffic when you can put the IP address of someone you don't like into the DNS query as its source? Then all the DNS responses will be directed to that host. Even if each server generates only a little traffic, the victim cannot survive in such conditions, because the traffic it receives is the traffic the attacker sends multiplied by the amplification factor. The amplification factor is the ratio of the size of the DNS server's response to the size of the request the attacker sent.

From that moment on, attacks like DNS amplification, along with all their relatives, have firmly entered our lives. Literally any application protocol based on UDP, be it LDAP, SSDP, NTP, RIPv1 or QOTD (Quote of the Day), is a DDoS vector not just potentially but in practice: there are many examples of real attacks for each of them.
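The arithmetic behind amplification is simple enough to sketch. The packet sizes and attacker bandwidth below are made-up illustrative numbers, not measurements from any real attack.

```python
def amplification_factor(request_bytes, response_bytes):
    """How many times larger the response is than the request that triggered it."""
    return response_bytes / request_bytes


def traffic_at_victim_bps(attacker_bps, factor):
    """Traffic arriving at the spoofed address, given what the attacker sends."""
    return attacker_bps * factor


# Hypothetical sizes: a 60-byte query that triggers a 3000-byte response.
factor = amplification_factor(request_bytes=60, response_bytes=3000)
print(factor)                                # 50.0
print(traffic_at_victim_bps(100e6, factor))  # 5000000000.0
```

With these assumed numbers, 100 Mbit/s of spoofed queries turn into 5 Gbit/s of responses at the victim.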

2007–2010: The rise of DDoS hacktivism

How were botnets usually assembled in those days? In addition to traditional Trojans that steal data or encrypt the machine, it turned out that DDoS bots could be planted on the machines of those who clicked on an email attachment. Thus, home and office Windows machines became very popular botnet participants in those days. But botnets were formed not only in this way, but also with the consent of the users themselves.

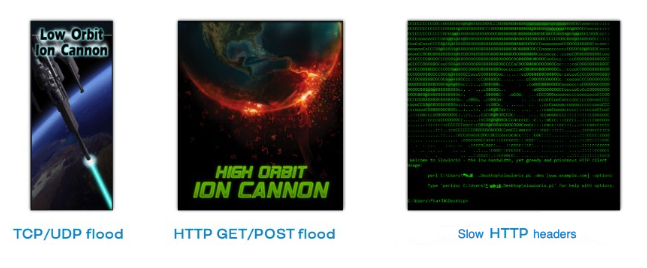

Hacktivism is an attempt to state political demands and protests using cybercrime methods, in particular, DDoS. DDoS hacktivists were users who voluntarily installed utilities on their personal machines. These utilities started generating traffic at the push of a button. It was only necessary to fill in the destination address, select the type of attack and press “start”. Such utilities included Low Orbit Ion Cannon (LOIC) and High Orbit Ion Cannon (HOIC).

Another new tool in the box, the wonderful utility Slowloris, implemented a previously unseen type of attack: slow attacks over HTTP. It opens connections that are never closed and sends headers over them at a rate of a few bytes per minute. There are many such connections, and the server keeps processing them until it exhausts its resources and freezes. Modified variants of such slow attacks continue to this day.
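A slow attack works because the server keeps a slot for every connection whose request headers are still incomplete. One common countermeasure is a deadline for receiving the full headers. The sketch below illustrates that check only; the `PendingConnection` type and the 10-second default are hypothetical and not taken from any particular web server.

```python
from dataclasses import dataclass


@dataclass
class PendingConnection:
    opened_at: float                # time the connection was accepted, in seconds
    headers_complete: bool = False  # set once the blank line ending the headers arrives


def should_drop(conn, now, header_deadline_s=10.0):
    """Drop connections that have not finished sending headers within the deadline."""
    return (not conn.headers_complete) and (now - conn.opened_at) > header_deadline_s


slow = PendingConnection(opened_at=0.0)
normal = PendingConnection(opened_at=0.0, headers_complete=True)
print(should_drop(slow, now=60.0))    # True: still dripping headers after a minute
print(should_drop(normal, now=60.0))  # False: headers arrived, request is being served
```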

DDoS-hacktivism has been actively used to attack the main mouthpieces of the fight against piracy: associations of music and film content copyright holders, those who chastised WikiLeaks, and many others.

The next historical period is the evolution and active modification of protection methods.

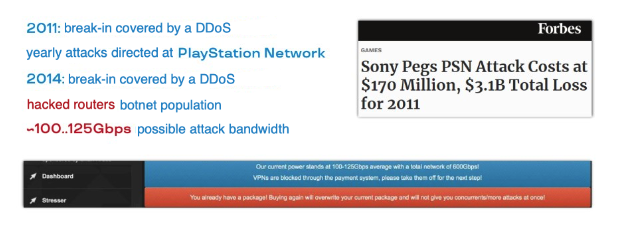

2011–2014: Sony’s misfortunes

Let's start with Sony, a company for which the period from 2011 to 2014 was not the best. Many factors overlapped: the earthquake in Japan, which destroyed part of the production, information leaks, the departure of key employees and court scandals. But DDoS was an important factor in this whole unfortunate story.

The attacks on Sony led to the realization that DDoS can be used not only to take a resource down, but also to cover up traces or hide the fact of a break-in. For example, an attacker launches a DDoS attack, and the firewall or web application firewall, unable to handle such a load, goes down. It has to be disconnected, bypassed, or turned off altogether. At this point, the attacker can attempt a break-in, get a shell and plant the desired backdoor. Then they carry out another DDoS attack so that everything goes down again and the engineers are busy fighting the DDoS instead of looking for where unauthorized access suddenly appeared.

The first such incident occurred in 2011. Sony was first DDoSed, then the PlayStation Network servers were hacked, forcing Sony to turn off the servers for more than 3 weeks. According to the company's calculations, it cost them more than 150 million in lost profits.

Attacks on the Sony PlayStation network continued every Christmas from 2011 to 2014. The last year is the cherry on the cake. Then there was a major DDoS attack on all the main Sony sites, as a result of which all sorts of things were leaked (film content, scripts, early versions of games, etc.).

The group that was Sony's nightmare for most of that time was called Lizard Squad. It is interesting because it used a botnet assembled mainly from commodity home Internet routers. Moreover, the group not only used it for its own attacks, but also rented it out to anyone willing to pay. They claimed that the capacity of their botnet exceeded 100 Gbit/s. That would have been a record at the time; nevertheless, 100 Gbit/s remained a psychological barrier that had been approached but not yet surpassed.

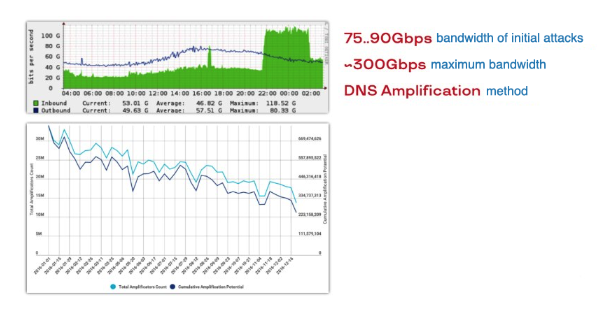

2013: Spamhaus attacks

The Spamhaus company, which fights spammers and other unseemly actors in a network, blacklisted the addresses of the then infamous hosting provider CyberBunker. The owners and users of CyberBunker turned out to be dissatisfied with this and, like earlier spammers in the case of PANIX, began to DDoS the hosts of Spamhaus.

After the first attack of about a hundred gigabits per second, Spamhaus realized that they could not handle it themselves and turned to the then-fledgling company Cloudflare and its cloud. Cloudflare replied, “No problem!” They filtered 70 to 100 Gbit/s, wrote a report and sent graphs. Then CyberBunker sent three times as much, and now Cloudflare was in trouble. Thus, in 2013, the threshold of 100 Gbit/s was surpassed for the first time.

Such an easy transition (from 70 to 300 Gbit/s) meant that the attackers' ability to generate DNS amplification attacks was limited only by the capacity of Internet backbone links. The largest attack would be as big as the amount of traffic that could be pushed through the backbone. Generating the traffic itself, using public DNS resolvers as amplifiers, was not a problem.

The only good thing is that since then the number of DNS servers that can be used for amplification has been steadily decreasing. There are a number of reasons for this: some operators close ports, others migrate to cloud DNS services and no longer need their own resolvers.

Perhaps at some point the number will fall so low that running DNS amplification becomes completely unprofitable. But we are not there yet.

2010–2016: Development of protection services

When we talk about attacks, we must not forget about DDoS protection. How did it develop during that period?

It all started with boxes called customer-premises equipment. This is equipment that is built into the customer's infrastructure: servers and specialized units that can quickly drop bad traffic, let through only what is allowed and offer a flexible rule configuration system. But it quickly became clear that only whole batteries of such boxes can cope with attacks of hundreds of gigabits and dozens of megapackets per second. They need to be put into the infrastructure in groups of 5, 10 or 20, which means capital expenditures, licenses and unpredictable costs over time.

Therefore, it became natural for a number of major players to move the boxes into the network of a telecom operator. Its connectivity is higher, its pipes are thicker, and it can afford to maintain a specialized traffic scrubbing center. Traffic directed to such a center is filtered, and a number of clients who need DDoS protection can be connected to it. This is how operator protection appeared. The early 2010s were the very moment when dedicated boxes from vendors like Arbor, Radware and Cisco began to move to large Internet service providers.

But a new problem arose: the attacks went far beyond 100 Gbit/s, and not every operator could handle them on its backbone. In addition, an operator has a limited number of interconnection points and its own geography. Even a Tier-1 provider usually covers only a number of regions. Depending on where the attack originates, it may be difficult to deliver its traffic for scrubbing through these limited interconnection points.

This is how distributed filtering networks emerged. Many people call them clouds, although organizationally they are not exactly clouds. These networks use connections to different providers and have a large number of scrubbing centers in different regions. Thanks to this increased surface area, they can take in a large amount of DDoS traffic and process each packet, each bit that arrives, so that only clean traffic reaches the clients they protect.

This transition took about 5-6 years to complete. As in the case of attacks, all of these options coexist, have their own target audience and their own specific goals. The largest business players usually combine all three described types of protection.

In this episode of the story, the attacks continue to raise the bar in numbers and in public mind, affecting more and more people.

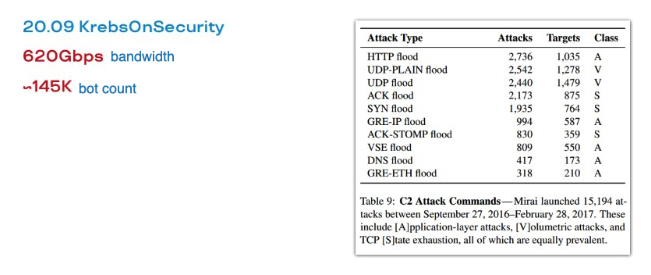

2016: Mirai and DDoS from a kettle

In 2016 it became clear that not only hacked computers or network devices can be botnet participants, but also any IP camera, a smart TV in your home and even a kettle with access to the Internet. The joke about “DDoS from a kettle” is much closer to reality than one might assume.

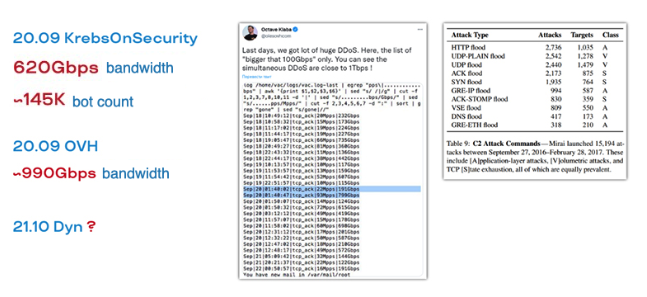

A real DDoS attack like that happened in 2016. The first wave targeted KrebsOnSecurity.com, the website of cybersecurity researcher Brian Krebs, who had been investigating the actions of hackers for many years. The attackers used a botnet called Mirai, a wonderful piece of code written in C. Mirai has a huge number of different attack methods in its arsenal: all kinds of floods at the transport and application layers, with cunning encapsulation to deceive DDoS filtering boxes. For example, it uses GRE (tunneling) headers. Many boxes that saw Mirai traffic at that time recognized it as something important for the servers in the infrastructure, so they did not filter it and simply let it through. Thanks to that, many attacks achieved their goal.

That attack immediately raised the bar to 600 Gbit/s. Almost 150 thousand bots were spotted in it. And that was not the limit. Very soon there was an attack on the French hoster OVH, where the numbers reached terabits per second! With certain reservations, but it happened.

The attacks continued for some time with the same intensity. OVH managed to survive thanks to its gigantic channel capacity and rapid response. But the attack on the DNS provider Dyn knocked it out completely for quite a long time, and along with it around 14 thousand popular sites that used Dyn's DNS services. Almost the entire East Coast of the United States went down. It was the attack on Dyn that gave rise to the investigation and the trial, in which the authors of Mirai were found and brought to justice.

So, how was the Mirai botnet defeated? It would seem that IoT devices are vulnerable, new devices appear every year and infecting them is trivially easy, so the botnet will just keep growing. But someone very smart and far-sighted leaked the Mirai sources on GitHub. Thus, instead of one Mirai that could gather hundreds of thousands of bots for a single attack, there appeared over a hundred similar botnets fighting for the same pool of hacked devices (vulnerable routers, cameras, kettles and pressure cookers). One large botnet collapsed into a bunch of small ones, and each of them individually could not have much effect. So the Mirai threat was destroyed on the principle of "divide and conquer".

2016: How long has DDoS been around as a service?

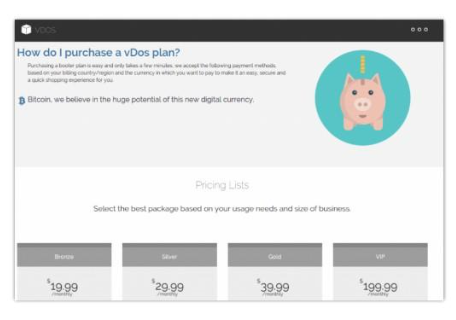

DDoS as a Service was already being offered by Lizard Squad, the group that attacked Sony, but it was only after the Mirai attacks that DDoS as a Service became the talk of the town. These attacks showed that renting out a botnet for DDoS for a certain time is a popular way for cybercriminals to earn money. At the same time, you do not need to hire a hacker as such and negotiate with them to go and hack someone. It is enough to rent a botnet of 50 thousand devices for a modest amount, and you can smother your victim's host with a huge volume of junk traffic for an hour. Easy-peasy!

Two Israeli teenagers organized the largest of the DDoS services, called vDOS. It was exposed in 2016. But naturally, as happens with any event that enters the mass consciousness, this one served not as a warning but, on the contrary, as a call to action. Hundreds of other booters (DDoS botnet rental services) appeared to replace vDOS, and they all moved to the dark web and keep accepting orders there.

This period marks the emergence of new types of attacks. Before that, there was only a quantitative build-up of DDoS methods you are now familiar with.

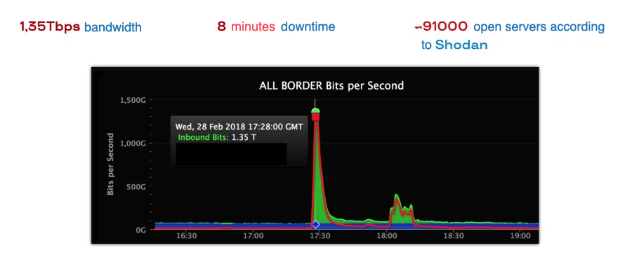

2018: Memcached amplification

The first surprise was the appearance of amplification attacks that used not NTP or LDAP, but Memcached. It would seem that Memcached is just a tool for caching content. A bunch of very large resources with an ample number of servers at the edge use Memcached, but it turns out that this tool can be exploited. If an attacker requests a large enough amount of data stored in the cache and specifies the victim's address as the recipient, Memcached will obediently send junk traffic to the victim.

The attack immediately peaked at a record 1.35 Tbit/s. It was unprecedented!

The threat was not that the method was too complex or different from what we had already seen. The thing is that according to the figures from Shodan in 2018, the number of servers whose open ports could be used was about 90 thousand – all belonging to average organizations on the Internet that suspected absolutely nothing.

Naturally, work was started on a massive scale to close access to those servers and reduce the number of amplifiers. Our good old CERT, together with GitHub, which wrote a post-mortem on the attack, issued a number of recommendations. Their key advice was to disable UDP in Memcached.

But how do we work then? You cannot use TCP for caching everywhere while keeping the same performance metrics that you have in production. Fortunately, there were other ways to improve the situation. It turned out that a certain version of CentOS, for example, immediately opens the UDP port for the network when you install Memcached. This can be patched, a small revision of all the assets can be carried out and the hole mended this way.

Well, Memcached was handled one way or another, but literally a year later a new prank came along that no one had expected.

2019: TCP SYN-ACK Amplification

We talked about amplification based on UDP protocols: they are very easy to spoof, because nobody verifies the source address, i.e. the sender of the traffic. The amplification factors are pretty large, too, multiplying bandwidth and packet counts by tens or hundreds of times. Doing the same with TCP would seem pointless: the amplification factor is incomparably smaller.

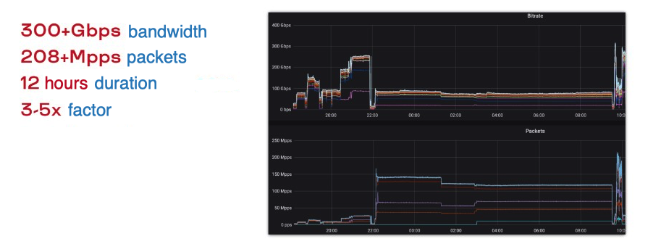

And here is the result:

Protecting its colleagues at Servers.com, Qrator Labs withstood an attack that lasted more than half a day. By amplifying SYN-ACK packets at the transport layer, the attackers unleashed 200 megapackets per second. That’s a lot. SYN floods of the time reached only tens of megapackets per second (30-60 Mpps).

The amplification factor of such an attack is really low. It works by retransmitting identical segments when they go unacknowledged, not by increasing the payload of the segment being sent. That's why, for a while, it wasn't very profitable to use it for high-bandwidth DDoS attacks.
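The packet-count amplification can be estimated with a short sketch. It assumes that the reflecting server retransmits its SYN-ACK a fixed number of times and that the victim never answers with a reset; the value of 5 retries matches the Linux default for `net.ipv4.tcp_synack_retries`, while the packet rate is an invented example.

```python
def synack_packets_per_syn(synack_retries):
    """One initial SYN-ACK plus every retransmission sent while no ACK arrives."""
    return 1 + synack_retries


def reflected_pps(spoofed_syn_pps, synack_retries):
    """Average packet rate at the victim; retransmissions are spread over the backoff period."""
    return spoofed_syn_pps * synack_packets_per_syn(synack_retries)


print(synack_packets_per_syn(5))    # 6
print(reflected_pps(1_000_000, 5))  # 6000000
```

Since a SYN-ACK is about the same size as a SYN, the gain is in packets per second rather than in bits per second, which is why this vector matters mostly as a packet-rate attack.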

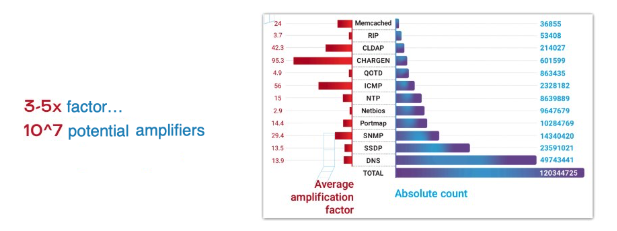

Here is the data from a Qrator Labs report on how many amplifiers and with what factors appeared in 2019 based on UDP:

We can see that amplification factors of tens or hundreds are quite achievable. But the number of such machines is decreasing, and by 2019 it had become much more difficult to launch a very high-bandwidth attack using them. Attackers have to compete for these resources. Given this fact, TCP SYN-ACK amplification attacks have suddenly become more profitable. They now occur quite often. And the number of potential hosts that can be used for this kind of amplification is comparable to the total number of UDP-based amplifiers across all protocols.

2021: Mēris on MikroTik routers

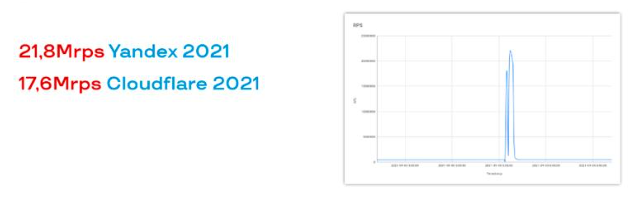

The very next case was something hard to expect. Someone infected hundreds of thousands of MikroTik routers with the same bot and used them to launch application-layer attacks of tens of millions of requests per second.

This is a serious strain on any load balancer, especially since the attack uses HTTPS, which adds the cost of TLS encryption. No one is likely to survive such an attack, except very large networks and specialized providers.

Yandex handled the attack with honor and shared the data with Qrator Labs. Qrator Labs named the botnet Mēris (the Latvian for ‘plague’), because the effect of such an attack on any infrastructure is comparable to a plague epidemic.

Cloudflare managed to withstand a similar attack. A public effort began immediately to inform MikroTik owners that they urgently needed to update their outdated RouterOS, since the vulnerability had already been known for several years. Qrator Labs published a check on their resources: “Are you a member of the Mēris botnet, has your IP appeared in its DDoS attacks?”.

However, it turned out that Mēris' capabilities were only partially unveiled in 2021, because the very next year Google was hit by twice as tough an attack of the same type. Therefore, Mēris, like the plague, continues to march around the world's Internet gaining loyal followers.

What have we known and what have we learned?
